How to Use Large Language Models (LLMs) safely and securely

Late in 2022, ChatGPT made its debut and dramatically changed the world. Within 5 days, it had reached 1 million users – an unprecedented adoption rate for any consumer application since the dawn of the Internet. Since then, many companies have started to think much bigger about what AI could mean for their customers and the wider world.

Here at Equal Experts we’ve seen a growing number of our customers evaluate how they can take advantage of this powerful technology. Business leaders are also rightly concerned about how to harness it safely and securely. In this article, we’ll explore some of the risks associated with adopting an LLM and discuss how these can be safely managed.

What is an LLM?

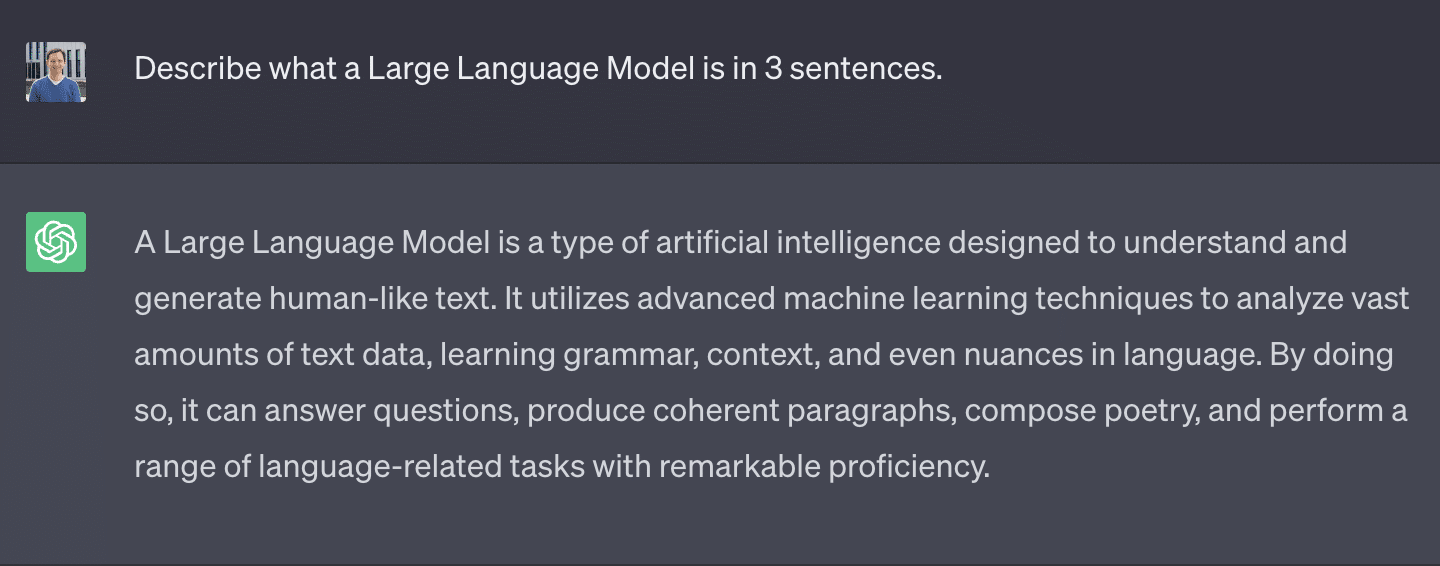

So what exactly is a large language model? Let’s see what ChatGPT has to say about that:

Follow that up with a question about what some common uses are for LLMs, and you’ll get a long list of suggestions ranging from content generation and customer support to professional document generation for medical, scientific and legal fields, as well as creative writing and art. The possibilities are immense!

Note: The above section is the only part of this article written with the assistance of an LLM!

When it comes to securing these kinds of systems, the good news is that we’re not starting from scratch. Decades of security research are still relevant, although there are some emerging security practices that you need to be aware of.

Tried and tested security practices are still important

Securing LLM architectures does not mean throwing out everything we know about security. The same principles we’ve been using to design secure systems are still relevant and equally important when building an LLM-based system.

As with many things, you have to get the basics right first – and we encourage you to start strong with security foundations such as least privilege access, secure network architecture, strong IAM controls, data protection, security monitoring, and secure software delivery practices such as those described in our Secure Delivery Playbook. Without these foundations in place, you risk building LLM castles on sand, and the cost and complexity in retrofitting foundational security controls is very high.

When it comes to LLM architectures, building in tried and tested security controls is critical, but not sufficient. LLMs bring with them some unique security challenges that need to be addressed. Security is contextual; there is no one-size-fits-all solution. So what do those LLM-specific security concerns look like?

New security practices are emerging

This is a new area of research that’s still undergoing a lot of change, but there are consistent themes across the industry around some of the major areas to focus on. You should see these as an LLM-specific security layer on top of the foundational security controls we described earlier. In many cases, these are not entirely new practices; instead, they are facets of security that we’ve been thinking about for a long time but are now being observed through a different lens.

Governance

Security governance models need to be updated to incorporate AI-specific concerns. A governance model defines clear roles and responsibilities, sets expectations for everyone in the organisation, and provides a mechanism to help you maintain suitable security standards over time. Simon Case, Head of Data at Equal Experts, has written a great article describing data governance.

Data governance and security governance need to work hand-in-hand. A strong AI security governance model should provide clear guidance to engineering teams on the kinds of security risks they need to protect against and the security principles they need to follow, allowing them to adopt the best controls to meet those needs.

Some areas to consider when defining your governance approach include:

- Model usage: What is the organisation’s policy on using SaaS models (e.g. OpenAI) vs self-hosted models? What are the data protection and regulatory implications of these decisions?

- Data usage: Does the organisation permit AI-based solutions on PII or commercially-sensitive data? Do you allow use of customer data in LLM development?

- Privacy and ethics: What use cases are acceptable for LLMs? Is automated decision making allowed, or is human oversight required? Can an LLM be exposed to customers or is it exclusively for internal use?

- Agency and explainability: How do you ensure users can trust the decisions / outputs from AI systems? Are AI systems permitted to make decisions autonomously or is human intervention required?

- Legal: What laws and regulations apply, and what internal engagement is needed with company legal teams when designing and building an LLM-based solution?

Delivery

The secure development practices that have become commonplace over the past few years remain applicable, but now need to be reexamined in light of new AI-specific threats. For example:

- Secure by design: Do your security teams have the right knowledge of AI-based systems to guide engineering teams towards a secure design?

- Training data security: Where is your training data sourced from? How can you validate the accuracy and provenance of that data? What controls do you have in place to protect it from leaks or poisoning attacks?

- Model security: How are you protecting against malicious inputs, such as prompt injection? Where is your trained model stored, and who has access to it?

- Supply chain security: What checks are in place to validate the components used in the system? How are you ensuring isolation and access control between components and data?

- Testing: Are you conducting adversarial testing against your model? Have your penetration testers got the right skills and experience to assess LLM-based systems?
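
To make the training data provenance question a little more concrete, here is a minimal sketch of one possible control: checking dataset files against a manifest of known-good SHA-256 digests before training starts. The function names, the manifest layout and the in-memory `files` mapping are our own assumptions for illustration, not part of any particular tool.

```python
import hashlib


def digest(content: bytes) -> str:
    """Return the hex SHA-256 digest of a dataset file's content."""
    return hashlib.sha256(content).hexdigest()


def find_tampered(manifest: dict[str, str], files: dict[str, bytes]) -> list[str]:
    """Return the names of files that are missing or do not match the manifest.

    `manifest` maps file name -> expected digest (recorded when the data was
    reviewed); `files` maps file name -> the bytes about to be used for training.
    """
    problems = []
    for name, expected in sorted(manifest.items()):
        content = files.get(name)
        if content is None or digest(content) != expected:
            problems.append(name)
    return problems
```

A training job could refuse to start whenever `find_tampered` returns a non-empty list. This only detects changes made after the manifest was recorded, so it complements, rather than replaces, reviewing where the data came from.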

Operations

Monitoring an LLM-based system requires that you understand the new threats that come into play, including threats to the underlying data as well as the abuse of the LLM itself (e.g. leading to undesirable actions or output). Some areas to consider are:

- Response accuracy: How do you ensure the model continues to produce accurate results? Can you detect model abuse that taints outputs? How do you detect and correct model drift?

- Abuse detection: Can you identify abusive inputs to the system? Can you identify when model outputs are being used for harm? Do you have incident response plans to protect against these situations?

- Pipeline security: Have you threat-modelled your delivery pipelines? Are they monitored as production systems?

- AI awareness: Do your security operations teams understand AI systems sufficiently to monitor them? Have you updated your security processes to factor in changes with AI?
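
As one hedged illustration of drift detection, the sketch below compares the distribution of a model score (or input feature) in production against a baseline using the Population Stability Index. The bin edges, the small floor used to avoid dividing by zero and the function names are assumptions chosen for the example; PSI is only one of several measures you could use.

```python
import math
from bisect import bisect_right


def bin_fractions(values: list[float], edges: list[float], floor: float = 1e-6) -> list[float]:
    """Return the fraction of values falling into each bin defined by sorted edges."""
    if not values:
        raise ValueError("values must not be empty")
    counts = [0] * (len(edges) + 1)
    for value in values:
        counts[bisect_right(edges, value)] += 1
    # Floor each fraction so empty bins do not cause log(0) or division by zero.
    return [max(count / len(values), floor) for count in counts]


def population_stability_index(baseline: list[float], current: list[float], edges: list[float]) -> float:
    """Sum of (current - baseline) * ln(current / baseline) over all bins."""
    base = bin_fractions(baseline, edges)
    curr = bin_fractions(current, edges)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, curr))
```

A value near zero suggests the two distributions are similar. A commonly quoted rule of thumb treats values above roughly 0.2 as worth investigating, but the right threshold depends on your model and data.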

Do remember this is a very new field and the state of the art is changing all the time. The industry’s knowledge of the threats and countermeasures is still evolving, so your approach will need constant attention throughout the lifecycle of your LLM-based product. There are many useful guides and frameworks to draw on when defining your own organisation-specific approach to LLM adoption, such as Google’s Secure AI Framework. We would encourage you to invest time researching these when defining your own AI adoption plans.

How can I use LLMs safely within my business?

Given how new this technology is, we would recommend your first LLM-based project is focused on an internal use case. This allows you to get familiar with the technology in a safe environment before looking to adopt it for more ambitious goals. For example, we’ve seen teams evaluate LLMs to improve internal platform documentation, making it easier for product teams to onboard to the platform while reducing the support burden on the platform team. This particular example provides an excellent proving ground for LLMs because:

- Users are all employees with a common interest in improving the system

- There is no direct customer impact

- Undesired LLM output can be monitored and feedback loops designed to improve and correct model behaviour

- The model is trained on a well-defined set of high quality documentation, free from commercially sensitive data or PII

- The system has no ability to take autonomous actions on other systems

This is a fast-moving area with constant improvements, but there are sound principles for ensuring secure adoption.

Conclusion

Start with a strong foundation built on secure software engineering principles & practices, ensure you know exactly how you’re using LLMs and what data could be processed, and apply reasonable security controls for the new and emerging threats.

How can Equal Experts help?

LLMs are not a technology to fear or avoid. They’re a powerful new capability that should be pursued cautiously, with a well thought out plan that takes all the relevant security issues into account. The approach we encourage at Equal Experts is to conduct a discovery to identify the most valuable problems to be solved. Once you’ve tested your ideas and agreed on a particular problem to solve with an LLM, consider running an inception to align everyone on the team and de-risk delivery. Of course, security should be at the forefront of the conversation throughout this process. We have extensive experience in all of these areas and would love to help you get started in this new field. If you’re exploring ideas around LLMs, do get in touch.

In our recent Operationalising ML Playbook we discussed the most common pitfalls during MLOps. One of the most common pitfalls? Failing to implement appropriate secure development at each stage of MLOps.

Our Secure Development playbook describes the practices we know are important for secure development and operations and these should be applied to your ML development and operations.

In this blog we will explore some of the security risks and issues that are specific to MLOps. Make sure you check them all before publishing your model into production.

In machine learning, systems use example data to try to learn something – which may be output as a prediction or insight. The examples used to train ML models are known as training datasets, and security issues can be broadly divided into those affecting the model before and during training, and those affecting models that have already been trained.

Vulnerability to data poisoning or manipulation

One of the most commonly discussed security issues in MLOps is data poisoning – this is an attack where hackers attempt to corrupt or manipulate the data used for training ML models. This might be by switching expected responses, or adding new responses into a system. The result of data poisoning is that data integrity and reliability are both damaged.

When data for ML models is collected from sensors or online sources, the risk of data poisoning can be extremely high. Attacks can include label flipping (data is poisoned by changing labels in data) and gradient descent attacks (where the ability of a model to understand how close it is to predicting the correct answer is damaged by either making the model falsely believe it’s found the answer, or by preventing it from finding the answer by constantly changing that answer).
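
One simple way to look for label flipping, assuming you hold a small audit set of examples that were labelled independently and that you trust, is to measure how often an incoming batch disagrees with it. The sketch below is illustrative only: the function names and the 5% threshold are our own assumptions, and a careful attacker could stay below any fixed threshold.

```python
def label_disagreement_rate(trusted: dict[str, str], incoming: dict[str, str]) -> float:
    """Fraction of shared example ids whose incoming label differs from the trusted one."""
    shared = trusted.keys() & incoming.keys()
    if not shared:
        raise ValueError("no overlapping example ids to compare")
    flipped = sum(1 for example_id in shared if trusted[example_id] != incoming[example_id])
    return flipped / len(shared)


def looks_poisoned(trusted: dict[str, str], incoming: dict[str, str], max_rate: float = 0.05) -> bool:
    """Flag the batch for review when disagreement exceeds the (assumed) threshold."""
    return label_disagreement_rate(trusted, incoming) > max_rate
```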

Exposure of data in the pipeline

You will certainly need to include data pipelines as part of your solution. In some cases they may use personal data in the training. Of course, these pipelines should be protected to the same standards as any other system you develop. Ensuring the privacy and confidentiality of data in machine learning models is critical to protect against data extraction attacks and function extraction attacks.
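
Where a pipeline does handle personal data, one option is to pseudonymise identifying fields before they reach the training set. The sketch below uses a keyed hash (HMAC-SHA256) from the Python standard library; the field names, the helper names and the way the key is supplied are assumptions for illustration. Pseudonymised data may still count as personal data, so treat this as one layer of protection and not a complete answer.

```python
import hashlib
import hmac


def pseudonymise(value: str, key: bytes) -> str:
    """Replace a value with a keyed hash so it cannot be recomputed without the key."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record: dict, pii_fields: set[str], key: bytes) -> dict:
    """Return a copy of the record with the named PII fields pseudonymised."""
    return {
        field: pseudonymise(str(value), key) if field in pii_fields else value
        for field, value in record.items()
    }
```

Because the same input and key always produce the same output, records can still be joined on the pseudonymised field. The key itself should live in a secrets manager, not in the pipeline code.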

Making the model accessible to the whole internet

Making your model endpoint publicly accessible may expose unintended inferences or prediction metadata that you would rather keep private. Even if your predictions are safe for public exposure, making your endpoint anonymously accessible may present cost management issues. A machine learning model endpoint can be secured using the same mechanisms as any other online service.
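
Rate limiting is one of those familiar mechanisms, and it also helps with the cost management concern. The following is a minimal token bucket sketch, assuming one bucket per authenticated client; the class name and parameters are our own, and in practice you would more likely rely on the rate limiting built into your API gateway.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity` requests, refilled at a steady rate."""

    def __init__(self, capacity: int, refill_per_second: float, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock  # injectable so the behaviour can be tested without sleeping
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        """Return True and spend a token if the caller is within its limit."""
        now = self.clock()
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```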

Embedding API Keys in mobile apps

A mobile application may need specific credentials to directly access your model endpoint. Embedding these credentials in your app allows them to be extracted by third parties and used for other purposes. Securing your model endpoint behind your app backend can prevent uncontrolled access.
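
As a rough sketch of that backend pattern, the function below builds the request your app backend would send to the model endpoint, attaching a key that is read from the backend’s own environment. The endpoint URL, the `MODEL_API_KEY` variable name and the bearer token scheme are all hypothetical; your model provider will define its own.

```python
import json
import os
import urllib.request
from collections.abc import Mapping

# Hypothetical internal URL and environment variable name, used only for illustration.
MODEL_ENDPOINT = "https://models.internal.example/predict"
API_KEY_VARIABLE = "MODEL_API_KEY"


def build_model_request(features: dict, environ: Mapping[str, str] = os.environ) -> urllib.request.Request:
    """Build the backend-to-model request, attaching a key the mobile app never sees."""
    api_key = environ.get(API_KEY_VARIABLE)
    if not api_key:
        raise RuntimeError(f"{API_KEY_VARIABLE} is not configured on the backend")
    body = json.dumps({"features": features}).encode("utf-8")
    return urllib.request.Request(
        MODEL_ENDPOINT,
        data=body,
        method="POST",
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
    )
```

The mobile app authenticates to your backend as a user, and only the backend holds the model credential, so the key can be rotated without shipping a new app release.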

As with most things in development, it only takes one person to neglect MLOps security to compromise the entire project. We advise organisations to create a clear and consistent set of governance rules that protect data confidentiality and reliability at every stage of an ML pipeline.

Everyone in the team needs to agree on the right way to do things – it only takes one leak or data attack for the overall performance of a model to be compromised.

The software delivery process has been transformed in the last decade, with the adoption of well-understood paths that incorporate functions such as testing, release management and operational support.

These changes have enabled organisations to speed up time to market and improve service reliability. So why does security still feel so hard for so many product teams?

In our Secure Delivery playbook, we explain the principles and practices you can apply to security within continuous delivery. We’ll also explain the security pitfalls that we encounter with customers, and how you can avoid them. The top five software delivery security challenges we’ve identified are:

- Security gate bottlenecks

- Disruptive chunks of security work

- Lack of tangible value

- Unsuitable security policies and checklists

- Inefficient duplication of effort

1. Security gate bottlenecks

Product teams need to roll out new digital services to customers as quickly as possible, to gain rapid feedback and iterate on their minimum viable product. However, too often this process is held up by weeks or months, because of the need for lengthy risk assessments and penetration tests. These checks are carried out by an overloaded security team that doesn’t have the capacity to meet multiple iterative launch deadlines.

You can reduce this bottleneck by embedding security assurance ownership into product teams. If product teams understand what assurance is required from a security assessment, then they can proactively provide it through code scanning, threat modelling and just-in-time security controls. This means security teams can change their role from fully assessing each release to simply checking the provided evidence.

The success statement you’re looking for is:

“We no longer wait for security teams to sign off that our release is secure. Instead, we provide security scan results and documented security controls in an agreed format, which they quickly verify and use for assurance.”
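
To show what checking evidence in an agreed format might look like, here is a small sketch. The `ReleaseEvidence` structure and the control names are hypothetical; the real format is whatever your product and security teams agree between them.

```python
from dataclasses import dataclass, field


@dataclass
class ReleaseEvidence:
    """Hypothetical agreed format that a product team submits with each release."""
    service: str
    open_critical_findings: int
    documented_controls: set[str] = field(default_factory=set)


def assurance_gaps(evidence: ReleaseEvidence, required_controls: set[str]) -> list[str]:
    """Return human-readable gaps; an empty list means the evidence can be accepted."""
    gaps = []
    if evidence.open_critical_findings > 0:
        gaps.append(f"{evidence.open_critical_findings} critical scan finding(s) still open")
    for control in sorted(required_controls - evidence.documented_controls):
        gaps.append(f"missing documented control: {control}")
    return gaps
```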

2. Disruptive chunks of security work

It can be difficult to plan for the outcome of large, infrequent security reviews or audits. Teams are often required to take on large chunks of remedial security or compliance work, which can lead to combative negotiations, disruption and widespread risk acceptance.

An effective way to reduce this disruption is by adopting security standards and controls earlier, in consultation with security teams. This means defining a clear set of standardised security requirements that are based on data sensitivity and system architecture. Additionally, shifting security requirements left towards the beginning of the delivery lifecycle means that product teams can design in controls from the start.

This looks like:

“We knew from product inception that our payment service would require service to service authentication and the encryption of card details. We planned this from the beginning and used agreed mechanisms that led to a very smooth delivery.”
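
Standardised requirements based on data sensitivity and system architecture can be as simple as a lookup that teams consult at inception. The sketch below is purely illustrative: the categories and control names are invented for the example and would come from your own security standards.

```python
# Hypothetical control catalogue, keyed by data sensitivity and architecture style.
BASELINE = {"tls-in-transit", "dependency-scanning"}
BY_SENSITIVITY = {
    "public": set(),
    "personal": {"encryption-at-rest", "access-audit-log"},
    "payment-card": {"encryption-at-rest", "access-audit-log", "field-level-encryption"},
}
BY_ARCHITECTURE = {
    "static-site": set(),
    "microservice": {"service-to-service-auth"},
}


def required_controls(sensitivity: str, architecture: str) -> set[str]:
    """Return the controls a new service should plan for from day one."""
    if sensitivity not in BY_SENSITIVITY:
        raise ValueError(f"unknown data sensitivity: {sensitivity}")
    if architecture not in BY_ARCHITECTURE:
        raise ValueError(f"unknown architecture: {architecture}")
    return BASELINE | BY_SENSITIVITY[sensitivity] | BY_ARCHITECTURE[architecture]
```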

3. Lack of tangible value

Too often, security activities such as code scanning, risk assessment and remedial work are carried out with little discernible value, apart from being able to say, “We did what we needed to do to pass security sign-off”. This makes it difficult to justify security activities against product needs, and to measure their value.

This can be improved by measuring and owning security performance effectively. If you understand how security activities can be measured and used to provide assurance evidence, then you can prove vulnerabilities are fixed within timelines or that important security controls are widely adopted. This also means you can help teams take responsibility for owning security improvements and prioritising work.

“Using our security dashboards, as a delivery owner I can demonstrate that 100% of my critical systems have granular database permissions, are regularly scanned for vulnerabilities against the OWASP top 10, and 95% of any vulnerabilities found are fixed within 14 days.”
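
A dashboard figure such as the share of vulnerabilities fixed within 14 days can be derived from very little data. The sketch below assumes each vulnerability record carries the date it was found and, if fixed, the date it was fixed; the `Vulnerability` class is hypothetical and stands in for whatever your scanner or ticketing system exports.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Vulnerability:
    found_on: date
    fixed_on: Optional[date] = None  # None means the vulnerability is still open


def percent_fixed_within(vulnerabilities: list[Vulnerability], days: int = 14) -> float:
    """Percentage of all vulnerabilities that were fixed within `days` of discovery."""
    if not vulnerabilities:
        return 100.0  # assumption: nothing found counts as meeting the target
    on_time = sum(
        1
        for v in vulnerabilities
        if v.fixed_on is not None and (v.fixed_on - v.found_on).days <= days
    )
    return 100.0 * on_time / len(vulnerabilities)
```

Open vulnerabilities count against the figure here, which is a deliberate choice: excluding them would let the metric look healthy while work piles up.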

4. Unsuitable security policies and checklists

It’s important to define a set of technical security requirements and standards if you want to scale out security assurance effectively. However, in many cases the result is hundreds of questions in a spreadsheet, based on old and high-level industry standards. Such checklists are created in isolation by security teams, and rarely updated. This leads to security activities that are unsuitable for fast-changing tech stacks and modern design paradigms, such as microservices, serverless and composable front-ends.

You can improve on this by co-developing useful, relevant and up-to-date security standards. Ensure standards are co-developed and co-owned by product and security teams, and regularly review and extend them to keep up with new technology or innovative system architectures.

This looks like:

“Our security standards are seen as a valuable design toolkit for engineers and architects that provide early indication of security requirements and act as a blueprint when implementing security controls.”

5. Inefficient duplication of effort

Security scans and/or penetration tests for multiple digital services often find the same vulnerabilities over and over again, with multiple product teams carrying out the same remedial work or accepting the same business risks. This results in duplicated, expensive work and/or increased security risk from cross-cutting control deficiencies that are too big for any one team to fix.

You can remove the duplication with:

- Focused penetration testing. You should review penetration test findings globally, and move towards in-depth, regular testing and scanning on cross-cutting areas such as web front-ends or shared infrastructure, while reducing testing on individual microservices.

- In-depth remediation efforts. Tackle shared vulnerabilities by carefully standardising shared components such as base VM or container images, shared framework libraries or reverse-proxies, and fixing them regularly so teams get fixes “for free”.

This looks like:

“We no longer pen test each new customer-facing website component before release. Instead, we test the whole site in depth every 3 months, and since we standardised our web server configuration we no longer receive hundreds of duplicated findings.”
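
Reviewing findings globally can start with something as simple as grouping them by rule and shared component. In the sketch below, the `rule`, `component` and `service` keys are an assumed export format, not the output of any specific scanner.

```python
from collections import defaultdict


def cross_cutting_findings(findings: list[dict], min_services: int = 2) -> dict[tuple[str, str], list[str]]:
    """Group findings by (rule, component) and keep those seen in several services."""
    services_by_issue = defaultdict(set)
    for finding in findings:
        key = (finding["rule"], finding["component"])
        services_by_issue[key].add(finding["service"])
    return {
        key: sorted(services)
        for key, services in services_by_issue.items()
        if len(services) >= min_services
    }
```

Anything this returns is a candidate for a single fix in the shared component, so that individual teams do not each repeat the same remediation.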

In upcoming blog posts, I’ll go into more detail on each of these pitfalls with some useful concrete techniques to avoid them. So, follow Equal Experts on Twitter and LinkedIn for updates!