How we ended up creating language-agnostic data pipelines for our customers at Equal Experts

Building on the experience we shared in evolving a client’s data architecture, we decided to share a reference implementation of data pipelines. First, a recap from the data pipeline playbook.

What is a Data Pipeline?

From the EE Data Pipeline playbook:

A Data Pipeline is created for data analytics purposes and has:

- Data sources – these can be internal or external and may be structured (e.g. the result of a database call), semi-structured (e.g. a CSV file or a Google Sheet), or unstructured (e.g. text documents or images).

- Ingestion process – the means by which data is moved from the source into the pipeline (e.g. API call, secure file transfer).

- Transformations – in most cases data needs to be transformed from the input format of the raw data, to the one in which it is stored. There may be several transformations in a pipeline.

- Data Quality/Cleansing – data is checked for quality at various points in the pipeline. Data quality will typically include at least validation of data types and format, as well as conforming against master data.

- Enrichment – data items may be enriched by adding additional fields such as reference data.

- Storage – data is stored at various points in the pipeline, usually at least in the landing zone and in a structured store such as a data warehouse.
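
The data quality step in the list above can be sketched in Python as follows. This is a minimal illustration under assumptions of ours: the record fields (`area_code`, `date`, `new_cases`), the `MASTER_AREA_CODES` set and the `validate_record` helper are all hypothetical and are not taken from the playbook or the reference implementation.

```python
from datetime import date

# Hypothetical master data: the set of area codes considered valid.
MASTER_AREA_CODES = {"AREA-1", "AREA-2"}


def validate_record(record: dict) -> list[str]:
    """Return a list of data quality problems found in one record."""
    problems = []

    # Type check: new_cases must be a non-negative integer (bools excluded).
    cases = record.get("new_cases")
    if not isinstance(cases, int) or isinstance(cases, bool) or cases < 0:
        problems.append("new_cases must be a non-negative integer")

    # Format check: date must parse as an ISO 8601 calendar date.
    try:
        date.fromisoformat(str(record.get("date")))
    except ValueError:
        problems.append("date is not a valid ISO 8601 date")

    # Conformance check: area_code must exist in the master data.
    if record.get("area_code") not in MASTER_AREA_CODES:
        problems.append("area_code not found in master data")

    return problems
```

A record with no problems returns an empty list, so a pipeline step could reject or quarantine any record for which the list is non-empty.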

Functional requirements

- Pipelines that:

  - Are easy to orchestrate

  - Support scheduling

  - Support backfilling

  - Support testing on all the steps

  - Are easy to integrate with custom APIs as sources of data

  - Are easy to integrate in a CI/CD environment

- Code that can be developed in multiple languages to fit each client’s skill set when Python is not a first-class citizen.
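
Backfilling, one of the requirements above, means re-running a pipeline for past periods, for example after a new source is added or a bug is fixed. Here is a minimal Python sketch, assuming a pipeline that processes one day of data per run. The names `backfill_dates`, `backfill` and `run_pipeline_for` are hypothetical and used only for illustration.

```python
from datetime import date, timedelta
from typing import Callable, Iterator


def backfill_dates(start: date, end: date) -> Iterator[date]:
    """Yield every day from start to end, inclusive, oldest first."""
    current = start
    while current <= end:
        yield current
        current += timedelta(days=1)


def backfill(start: date, end: date, run_pipeline_for: Callable[[date], None]) -> int:
    """Run the (hypothetical) daily pipeline once per day in the range.

    Returns the number of runs triggered.
    """
    runs = 0
    for day in backfill_dates(start, end):
        # Each run should be idempotent, so repeating a day is safe.
        run_pipeline_for(day)
        runs += 1
    return runs
```

For example, `backfill(date(2021, 3, 1), date(2021, 3, 3), print)` would trigger three runs, one per day. In an orchestrator, each run would typically be submitted as a separate workflow with the day passed in as a parameter.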

Our strategy

In some situations a tool like Matillion, Stitchdata or Fivetran can be the best approach, but it is not the best choice for all of our clients’ use cases. These ETL tools work well when you can use their pre-made connectors, but when the majority of the data integrations need custom connectors, they are not the best approach. Apart from the known cost, there is also an extra cost when using these kinds of tools – the effort to make the data pipelines work in a CI/CD environment. Also, at Equal Experts, we advocate testing each step of the pipeline and, if possible, developing each step using test-driven development – and this is near impossible with these tools.

That being said, for the cases where an ETL tool won’t fit our needs, we identified the need for a reference implementation that we can use for different clients. Since the skill set of each team is different, and sometimes Python is not an acquired skill, we decided not to use the well-known Python tools that are widely used for data pipelines, like Apache Airflow or Dagster.

So we designed a solution using Argo Workflows as the orchestrator. We wanted something that allowed us to define the data pipelines as DAGs, as Airflow does.

Argo Workflows is a container-native workflow engine for orchestrating parallel jobs on Kubernetes. Argo represents workflows as DAGs (Directed Acyclic Graphs), and each step of the workflow is a container. Since data pipelines can easily be modelled as workflows, it is a great tool to use. Also, we are free to choose the programming language in which to write the connectors or the transformations; the only requirement is that each step of the pipeline is containerised.
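
To make the DAG idea concrete, here is a minimal sketch in Python that builds a workflow manifest as a plain dictionary and checks that its steps form a valid DAG. The manifest layout follows our understanding of the Argo Workflows `Workflow` resource (a `dag` template whose tasks list their `dependencies`), so check it against the Argo documentation before relying on it. The step names and container images (`example/ingest:latest` and so on) are hypothetical and are not taken from the reference implementation.

```python
import json
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical pipeline steps: name -> (container image, upstream steps).
# Each image could be built from a different programming language.
STEPS = {
    "ingest": ("example/ingest:latest", []),
    "load": ("example/load:latest", ["ingest"]),
    "transform": ("example/transform:latest", ["load"]),
    "quality-checks": ("example/quality-checks:latest", ["load"]),
}


def execution_order(steps: dict) -> list[str]:
    """Return one valid run order; raises graphlib.CycleError on a cycle."""
    graph = {name: set(deps) for name, (_image, deps) in steps.items()}
    return list(TopologicalSorter(graph).static_order())


def build_manifest(steps: dict) -> dict:
    """Build a Workflow manifest with one container template per step."""
    tasks = []
    for name, (_image, deps) in steps.items():
        task = {"name": name, "template": name}
        if deps:
            task["dependencies"] = list(deps)
        tasks.append(task)

    # The first template is the DAG itself; the rest are the containers.
    templates = [{"name": "pipeline", "dag": {"tasks": tasks}}]
    templates += [
        {"name": name, "container": {"image": image}}
        for name, (image, _deps) in steps.items()
    ]
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": "example-pipeline-"},
        "spec": {"entrypoint": "pipeline", "templates": templates},
    }


if __name__ == "__main__":
    print(execution_order(STEPS))
    print(json.dumps(build_manifest(STEPS), indent=2))
```

In this sketch `transform` and `quality-checks` both depend only on `load`, so an orchestrator is free to run them in parallel. Nothing in the manifest says which language each container was written in, which is the property the architecture relies on.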

For the data transformations, we found that dbt was our best choice. dbt handles the transformations needed between the staging tables and the analytics tables. It is SQL-centric, so there is no need to learn another language. dbt also has features that we wanted, like testing and documentation generation, and it has native connections to the Snowflake, BigQuery, Redshift and Postgres data warehouses.
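
The pattern that dbt supports, building analytics tables from staging tables with SQL and then testing the result, can be illustrated without dbt itself. The sketch below is plain Python using the standard library `sqlite3` module, not dbt, and the table and column names (`stg_cases`, `daily_cases`) are hypothetical. The checks mimic the idea behind dbt's `not_null` and `unique` tests, where a test is a query that should return no failing rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Staging table: raw rows as they were loaded, duplicates included.
conn.execute("CREATE TABLE stg_cases (area TEXT, day TEXT, new_cases INTEGER)")
conn.executemany(
    "INSERT INTO stg_cases VALUES (?, ?, ?)",
    [
        ("North", "2021-03-01", 10),
        ("North", "2021-03-01", 10),  # duplicate delivered by the source
        ("South", "2021-03-01", 7),
        ("South", "2021-03-02", 5),
    ],
)

# Transformation: deduplicate staging rows, then aggregate per day.
conn.execute(
    """
    CREATE TABLE daily_cases AS
    SELECT day, SUM(new_cases) AS total_cases
    FROM (SELECT DISTINCT area, day, new_cases FROM stg_cases)
    GROUP BY day
    """
)


def failing_rows(sql: str) -> list:
    """Run a test query; any row it returns counts as a failure."""
    return conn.execute(sql).fetchall()


# "not null" style test on total_cases.
not_null_failures = failing_rows(
    "SELECT * FROM daily_cases WHERE total_cases IS NULL"
)
# "unique" style test on day.
unique_failures = failing_rows(
    "SELECT day FROM daily_cases GROUP BY day HAVING COUNT(*) > 1"
)

result = conn.execute(
    "SELECT day, total_cases FROM daily_cases ORDER BY day"
).fetchall()
```

Both test queries return no rows here, so the transformed table would pass. Because the transformation and the tests are all SQL, the same approach works whatever language the ingestion steps are written in.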

With these two tools, we ended up with a language-agnostic data pipeline architecture that can easily be reused and adapted for different use cases and different clients.

Reference implementation

Because we value knowledge sharing, we have created a public reference implementation of this architecture in a GitHub repo, which shows a pipeline for a simple use case: ingesting UK COVID-19 data (https://api.coronavirus.data.gov.uk).

The goal of the project is to have a simple implementation that can be used as an accelerator for other teams. It can easily be adapted to build other data pipelines, to integrate into a CI/CD environment, or to extend the approach and make it work for different scenarios.

The sample project uses a local Kubernetes cluster to deploy Argo and the containers that represent the data pipeline. It also includes a database, where the COVID-19 data is loaded and transformed, and an instance of Metabase to show the data in a friendly dashboard.

We’re planning to add infrastructure as code to the reference implementation, so that the project can be deployed on AWS and GCP. We might also work on aspects such as making it easier to monitor the data pipelines when they are deployed in a cloud, or using Great Expectations.

Transparency is at the heart of our values

We value knowledge sharing and collaboration, so we hope that this article, along with the data pipelines playbook, will help you to start creating data pipelines in whichever language you choose.

Contact us!

For more information on data pipelines in general, take a look at our Data Pipeline Playbook. And if you’d like us to share our experience of data pipelines with you, get in touch using the form below.

It is common to hear that ‘data is the new oil,’ and whether you agree or not, there is certainly a lot of untapped value in much of the data that organisations hold.

Data is like oil in another way – it flows through pipelines. A data pipeline ensures the efficient flow of data from one location to another. A good pipeline allows your organisation to integrate new data sources faster, provides patterns that you can replicate, gives you confidence in your data quality, and builds in security. But data flow can be precarious and, when not given the correct attention, it can quickly overwhelm your organisation. Data can leak, become corrupted, and hit bottlenecks and, as the complexity of the requirements grows and the number of data sources multiplies, these problems increase in scale and impact.

About this series

This is part one in our six-part series on the data pipeline, taken from our latest playbook. Here we look at the very basics – what is a data pipeline and who is it used by? Before we get into the details, we want to outline what’s coming in the rest of the series. In part two, we look at the six main benefits of a good data pipeline, part three considers the ‘must have’ key principles of data pipeline projects, and parts four and five cover the essential practices of a data pipeline. Finally, in part six we look at the many pitfalls you can encounter in a data pipeline project.

Why is a data pipeline critical to your organisation?

There is a lot of untapped value in the data that your organisation holds, and that data is critical if you take data analysis seriously. Put to good use, data can reveal valuable business insights about your customers and your operations. However, to find these insights, the data has to be regularly, or even continuously, transported from the place where it is generated to a place where it can be analysed.

A data pipeline consolidates data from all your disparate sources into one or more destinations, to enable quick data analysis. It also ensures consistent data quality, which is absolutely crucial for reliable business insights.

So what is a data pipeline?

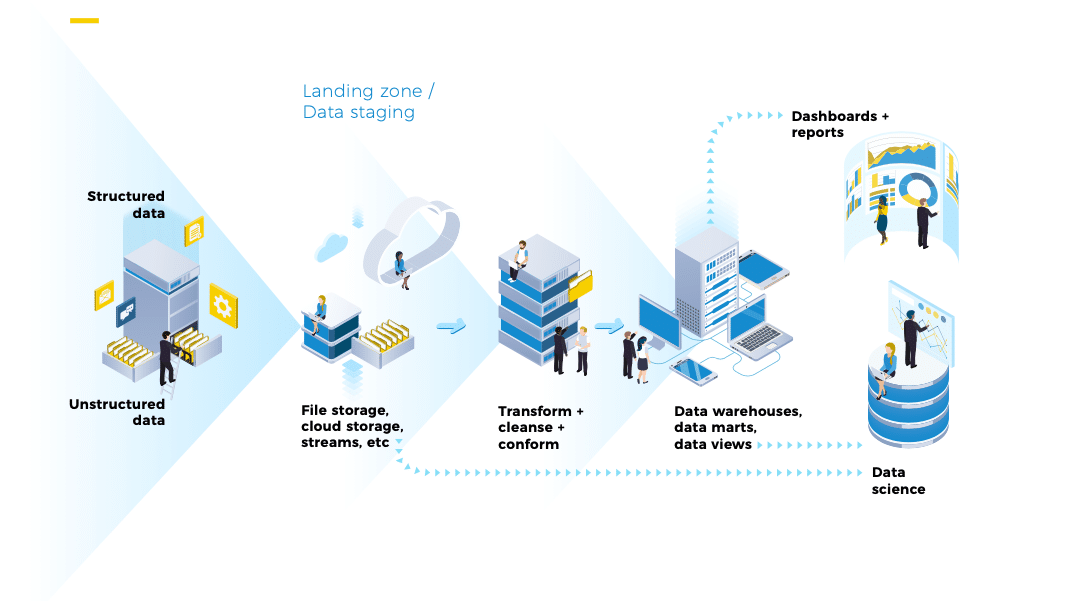

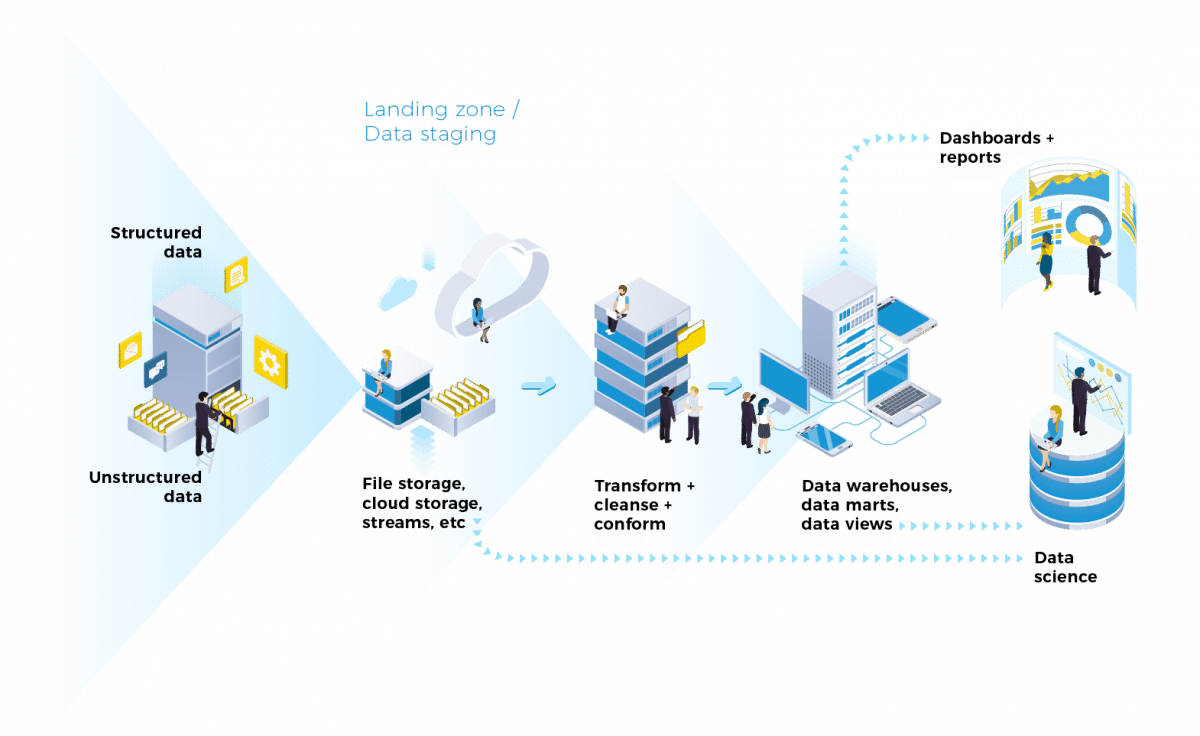

A data pipeline is a set of actions that ingest raw data from disparate sources and move the data to a destination for storage and analysis. We like to think of this transportation as a pipeline because data goes in at one end and comes out at another location (or several others). The volume and speed of the data are limited by the type of pipe you are using and pipes can leak – meaning you can lose data if you don’t take care of them.

The data engineers who create a pipeline provide a critical service for any organisation. They create the architectures that allow the data to flow to the data scientists and business intelligence teams, who generate the insight that leads to business value.

A data pipeline is created for data analytics purposes and has:

Data sources – These can be internal or external and may be structured (e.g., the result of a database call), semi-structured (e.g., a CSV file or a Google Sheets file), or unstructured (e.g., text documents or images).

Ingestion process – This is the means by which data is moved from the source into the pipeline (e.g., API call, secure file transfer).

Transformations – In most cases, data needs to be transformed from the input format of the raw data, to the one in which it is stored. There may be several transformations in a pipeline.

Data quality/cleansing – Data is checked for quality at various points in the pipeline. Data quality will typically include at least validation of data types and format, as well as conforming with the master data.

Enrichment – Data items may be enriched by adding additional fields, such as reference data.

Storage – Data is stored at various points in the pipeline, usually at least the landing zone and a structured store (such as a data warehouse).

End users – more information on this is in the next section.
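
Put together, the components above can be sketched as a chain of small functions, one per stage. This is an illustrative Python sketch only: the CSV layout, the `REFERENCE_REGIONS` lookup and the in-memory `warehouse` list are hypothetical stand-ins for a real source, real reference data and a real data warehouse.

```python
import csv
import io

# Hypothetical reference data used for enrichment.
REFERENCE_REGIONS = {"N1": "North", "S1": "South"}

# Hypothetical raw input; the second data row has a malformed value.
RAW_CSV = "area_code,new_cases\nN1,10\nS1,seven\nS1,7\n"


def ingest(raw: str) -> list[dict]:
    """Ingestion: read semi-structured CSV text into dictionaries."""
    return list(csv.DictReader(io.StringIO(raw)))


def cleanse(rows: list[dict]) -> list[dict]:
    """Data quality: keep only rows whose new_cases is a whole number."""
    return [row for row in rows if row["new_cases"].isdecimal()]


def transform(rows: list[dict]) -> list[dict]:
    """Transformation: convert text fields into their stored types."""
    return [
        {"area_code": row["area_code"], "new_cases": int(row["new_cases"])}
        for row in rows
    ]


def enrich(rows: list[dict]) -> list[dict]:
    """Enrichment: add the region name from the reference data."""
    return [
        {**row, "region": REFERENCE_REGIONS.get(row["area_code"])}
        for row in rows
    ]


def run_pipeline(raw: str, warehouse: list) -> None:
    """Storage: append the final rows to the in-memory warehouse."""
    warehouse.extend(enrich(transform(cleanse(ingest(raw)))))


warehouse: list[dict] = []
run_pipeline(RAW_CSV, warehouse)
```

After the run, `warehouse` holds two rows: the malformed `seven` row is dropped at the data quality stage, and each remaining row carries a `region` field added during enrichment. In a real pipeline each function would more likely be a separate, independently testable step.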

So, who uses a data pipeline?

We believe that, as in any software development project, a pipeline will only be successful if you understand the needs of the users.

Not everyone uses data in the same way. For a data pipeline, the users are typically:

Business intelligence/management information analysts, who need data to create reports;

Data scientists, who need data to do an in-depth analysis of point problems or create algorithms for key business processes (we use ‘data scientist’ in the broadest sense, including credit risk analysts, website analytics experts, etc.);

Process owners, who need to monitor how their processes are performing and troubleshoot when there are problems.

Data users are skilled at visualising and telling stories with data, identifying patterns, or understanding significance in data. Often they have strong statistical or mathematical backgrounds. And, in most cases, they are accustomed to having data provided in a structured form – ideally denormalised – so that it is easy to understand the meaning of an individual row of data without the need to query separate tables or databases.

Is a data pipeline a platform?

Every organisation would benefit from a place where they can collect and analyse data from different parts of the business. Historically, this has often been met by a data platform, a centralised data store where useful data is collected and made available to approved people.

But, whether they like it or not, most organisations are, in fact, a dynamic mesh of data connections which need to be continually maintained and updated. Following a single platform pattern often leads to a central data engineering team tasked with implementing data flows.

The complexities of meeting everyone’s needs and ensuring appropriate information governance, as well as a lack of self-service, often make it hard to ingest new data sources. This can then lead to backlog buildup, frustrated data users, and frustrated data engineers.

Thinking of these data flows as a pipeline shifts the mindset away from monolithic solutions and towards a more decentralised way of thinking: understanding which pipes and data stores you need, and implementing them the right way for that case whilst reusing where appropriate.

Now that we understand a little more about the data pipeline, what it is and how it works, we can start to look at the benefits and assess whether they align with your digital strategy. We cover these in the next blog article, ‘What are the benefits of data pipelines?’
