Our Data Health Check service recently helped one Equal Experts client to save more than $3 million. Why not find out if we could help you to realise the full potential of your organisation’s data?

May 31, 2023
https://www.equalexperts.com/blog/ai/adventures-in-fine-tuning-chatgpt/

How do you fine-tune ChatGPT to give you the best possible results? Find out what happened as we adventured further into using the latest AI chatbot.

February 2, 2023
https://www.equalexperts.com/blog/ai/adventures-in-how-to-use-chatgpt-can-it-answer-questions-about-my-business/

What’s your take on ChatGPT, the newest AI chatbot everyone is talking about? Here’s what happened when we used ChatGPT to answer questions about Equal Experts, and how we fine-tuned the results.

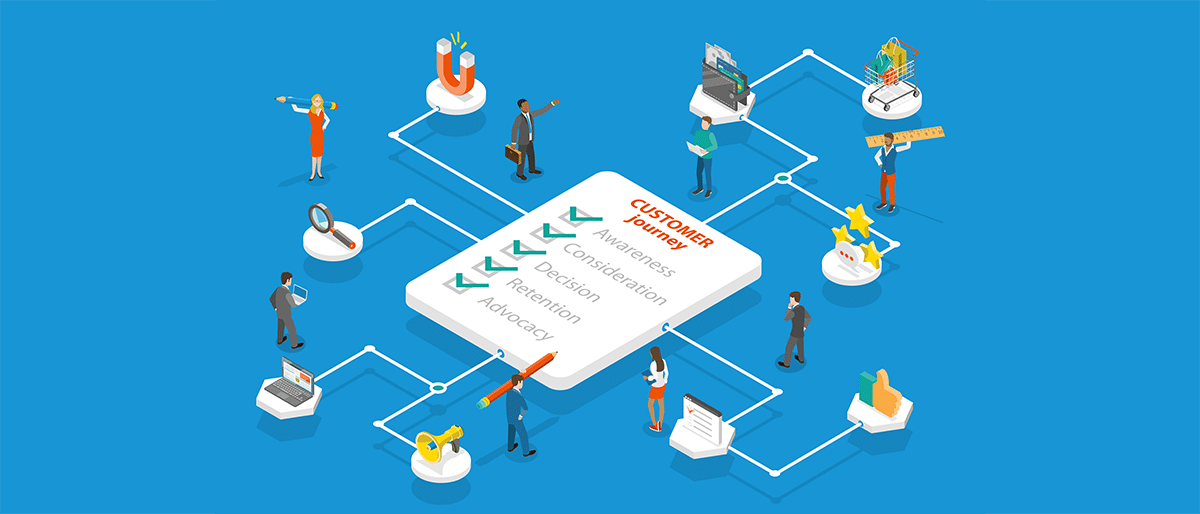

January 31, 2023
https://www.equalexperts.com/blog/our-thinking/how-to-generative-research-steps/

When we invest time and money in creating new solutions, how do we know we’re solving the right problem? Generative research helps us to understand the problems before we develop solutions.

January 13, 2023