On 19-20 October 2025, AWS had a 15-hour outage. The trigger was a DNS issue affecting DynamoDB in the us-east-1 region. The outage had a significant worldwide impact, affecting over 1,000 companies and millions of users, because:

- us-east-1 core services depend on DynamoDB

- us-east-1 contains core AWS orchestration systems (the control plane)

- us-east-1 is the default AWS region for many businesses

The other cloud providers have also had notable outages recently, such as GCP in June and Azure in October, soon after AWS. We’ve worked with customers to mitigate these cloud outages, and we’ve relearned some lessons I’d like to share.

Lesson 1: Multi-cloud isn’t the answer

Multi-cloud means running the same workload in two different cloud providers. Whenever there’s a cloud provider outage, there’s a surge of hot takes claiming that running your business in two clouds will protect you from an outage in one of them.

We’ve built multi-cloud solutions with customers before, and it’s always come with higher costs and higher risks than expected. Cloud provider services are proprietary, data synchronisation is hard, and every layer of complexity adds more development time, operational costs, and failure points. We often refer to Corey Quinn’s (in)famous Multi-Cloud Enlightenment quote:

“You didn’t eliminate your single point of failure; you lovingly nurtured two of them”.

Multi-cloud is incredibly expensive and introduces a level of complexity that actually makes predictable, high-reliability operations much harder. Our advice is: don’t do multi-cloud unless you’re compelled to do it for regulatory or legal reasons.

Lesson 2: Multi-region probably isn’t the answer

Multi-region means running the same workload in two different geographic regions in one cloud provider. While multi-region isn’t as complex or challenging as multi-cloud, it’s not always as attractive as it appears. A failure in Azure, AWS, or GCP core infrastructure has the potential to affect all regions, as shown by the recent AWS outage.

During a major outage, multi-region systems may retain access to their data, if it’s actually stored in the unaffected region. However, until the major outage is resolved, it’s likely that the workload will limp along, and its ability to heal, scale, or fail over will be compromised.

Multi-region also dramatically increases cloud costs compared to single-region. You’re paying for a full copy of all compute and storage resources in two regions. The cost of replicating data across AWS regions is significantly higher than the low cost of transferring data within a single region, so database changes can quickly become a significant factor in a multi-region bill.
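To make the cost argument concrete, here is a rough back-of-the-envelope sketch. The function name, the inputs, and every figure in it are hypothetical placeholders rather than real provider prices; it only assumes that each region pays for a full copy of compute and storage, and that replicated data is charged per GB.

```python
def multi_region_monthly_cost(
    compute: float,
    storage: float,
    replicated_gb: float,
    transfer_rate_per_gb: float,
    regions: int = 2,
) -> float:
    """Estimate a monthly bill when the workload is fully duplicated per region."""
    # Every region pays for a full copy of compute and storage.
    duplicated = regions * (compute + storage)
    # Each additional region receives a replicated copy of the changed data.
    replication = (regions - 1) * replicated_gb * transfer_rate_per_gb
    return duplicated + replication


# Hypothetical placeholder figures, not real provider prices.
single = multi_region_monthly_cost(10_000, 2_000, 0, 0.02, regions=1)
dual = multi_region_monthly_cost(10_000, 2_000, 50_000, 0.02, regions=2)
print(f"single-region: {single:.2f}, two regions: {dual:.2f}")
```

Plugging in your own bill and your own replication volume is a quick way to see whether the replication line is a rounding error or a major cost driver.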

Our advice is: don’t do multi-region unless the extra costs are justified by your ability to keep data and services in sync across regions, and you have a thorough understanding of what core cloud provider services you’re dependent on.

Lesson 3: Know your Cloud Provider

Certain considerations are the same for all cloud providers. Costs, laws affecting data, and resource availability will all vary by region. In particular, resource availability needs a lot of thought, as larger regions often have much more capacity for unpredictable scaling needs, like one-off peak business periods.

However, whichever provider you choose will have its own quirks. We usually see:

- New features: AWS releases new features to us-east-1 first, Azure to East US 2, whereas GCP goes global.

- Outage patterns: AWS outages typically start in one region and cascade to others, whereas Azure and GCP outages have historically been linked to an instantaneous global failure.

- Disaster Recovery: AWS and GCP allow you to choose regions based on your own criteria, while Azure has pre-determined region pairings.

Our advice is: don’t choose a cloud provider without understanding the trade-offs its quirks bring. Understand common and individual provider behaviours and make an informed decision about where to run your services.

Lesson 4: Stopping deployments doesn’t stop incidents

It’s a common misconception that deployments are the main cause of incidents. We’ve published a public analysis that showed most incidents are triggered by surprise events, not planned changes.

Our advice is: don’t decrease deployments and expect reliability to improve for the medium or long term. We would actually suggest doing more deployments. Increasing deployments means smaller change sets to test, smaller time windows to observe, and a smaller probability of each change introducing faults. If it’s a peak business period, of course you’ll want to apply different guidelines.
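The point about smaller change sets can be illustrated with a toy model. This is our own simplification for illustration, not the analysis mentioned above: it assumes every changed line independently introduces a fault with the same hypothetical probability, which real codebases won’t obey exactly.

```python
def deployment_fault_probability(changed_lines: int, fault_rate_per_line: float) -> float:
    """Probability that a single deployment contains at least one fault.

    Toy model: each changed line is assumed to fail independently
    with the same (hypothetical) fault rate.
    """
    return 1.0 - (1.0 - fault_rate_per_line) ** changed_lines


# Hypothetical numbers: one large release versus one small, frequent release.
big = deployment_fault_probability(changed_lines=1000, fault_rate_per_line=0.001)
small = deployment_fault_probability(changed_lines=50, fault_rate_per_line=0.001)
print(f"large deployment: {big:.3f}, small deployment: {small:.3f}")
```

Under this model the total risk across all changes is similar either way, but each small deployment is far less likely to contain a fault, and when one does there are far fewer lines to search.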

Lesson 5: People forget how to restore service

Werner Vogels, Amazon’s CTO, famously stated, “Everything fails all the time.” It’s a truth that underscores the inevitability of failure, regardless of which cloud provider you use.

If we accept that things will fail in both single-cloud and multi-cloud setups, how can we build reliable systems? The key is to optimise for resilience rather than robustness: instead of investing extensive time and resources in multi-cloud implementations, it’s more important that your organisation anticipates failures and optimises its ability to recover from them quickly and reliably.

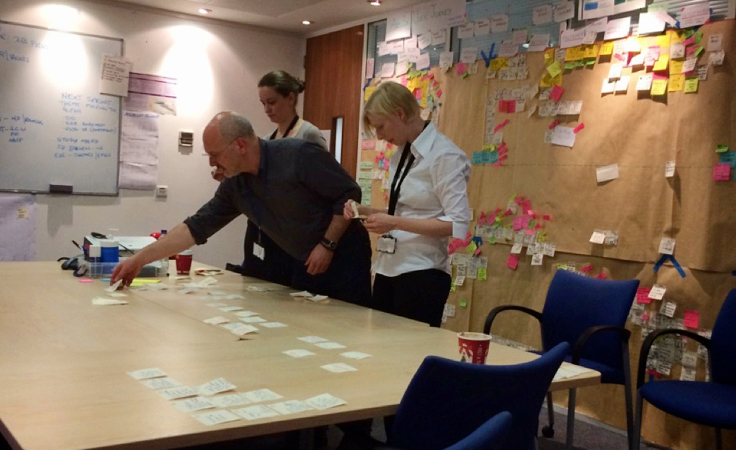

Chaos days are a great way to do this. They provide a crucial means of testing both your standard operating procedures (SOPs) and your team’s ability to execute them accurately under pressure. This practice is excellent for identifying weak recovery points and refining your response strategies, improving your mean time to recovery (MTTR). You can find more information about chaos days in our Chaos Day Runbook.
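If you record when each chaos day exercise was detected and when service was restored, tracking MTTR takes only a few lines. This is a minimal sketch: the function name and the drill timestamps are made up for illustration, and a real team would pull these values from its incident tooling.

```python
from datetime import datetime, timedelta


def mean_time_to_recovery(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Average the gap between detection and restoration across incidents."""
    if not incidents:
        raise ValueError("at least one incident is required")
    total = sum(
        (restored - detected for detected, restored in incidents),
        timedelta(),
    )
    return total / len(incidents)


# Hypothetical chaos day drills: (detected, restored).
drills = [
    (datetime(2025, 1, 10, 9, 0), datetime(2025, 1, 10, 9, 45)),
    (datetime(2025, 4, 11, 14, 0), datetime(2025, 4, 11, 14, 30)),
    (datetime(2025, 7, 12, 11, 0), datetime(2025, 7, 12, 11, 15)),
]
mttr = mean_time_to_recovery(drills)
print(f"MTTR across drills: {mttr}")
```

Comparing this figure from one chaos day to the next gives a simple signal of whether your SOPs and your team’s execution are improving.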

Our advice here is: implement the simplest possible cloud setup that supports your needs. Learn your system’s failure modes, and develop SOPs that allow you to recover from them quickly and reliably. Use regular chaos days to hone your responses.

Conclusion

Your cloud provider will have major outages. Even so, cloud providers still offer orders of magnitude better availability than on-premises data centres.

Cloud complexity can reduce reliability: the marginal resilience gains of multi-region rarely justify its costs, and multi-cloud should only be used when there is a legal or regulatory requirement to do so. In all other cases we recommend a single-cloud approach.

Whatever your cloud approach, we recommend running regular chaos days to hone the practice of failure recovery, optimising for resilience over robustness and transforming inevitable outages into mere blips. Reliability engineering is about embracing failure and learning from it, so that the cost and duration of failures are incrementally reduced.

About the author

Edd’s expertise spans software engineering, technical architecture, and high-performing organisational culture. Drawing on experience as the architect of HMRC’s digital tax platform, he provides strategic guidance in complex, multi-team environments.

Edd is passionate about building capabilities from the ground up, prioritising openness and learning to ensure delivery success is achieved through alignment between culture and code. He shares this experience with engagement teams and clients across the USA, UK, and Europe. Connect with Edd on LinkedIn.