Organisations across the board are racing to embed AI into software delivery. Licences are purchased, leaders speak enthusiastically about transformation at town halls, and dashboards fill with optimistic adoption metrics, as if licence activity equated to progress. For a few weeks, usage soars. Then it fades.

Developers experiment in isolation. Some quietly find value; others quietly give up. Leaders wait for productivity gains that never seem to arrive. One client summed it up perfectly:

“We’re doing everything you told us not to do – and yes, it’s not working.”

So what’s going wrong?

It’s not the AI tools.

It’s the way organisations are trying to adopt them.

Why traditional approaches fail

Organisations are approaching generative AI as if it were a purely technological transformation:

- Launch proofs of concept to test the AI tools

- Send people to prompt-engineering courses

- Standardise the tools available

These activities feel productive, but they rarely lead to anything actually being delivered. Proofs of concept test capability but don’t always reflect the realities of production; prompt engineering teaches syntax, but not judgement; and procurement cycles move more slowly than the product landscape they’re designed to evaluate.

Meanwhile, innovators in the organisation run into existing bottlenecks, governance hurdles, and lengthy decision-making processes. Sceptics who haven’t yet experienced the benefits of AI observe the friction and feel validated – AI doesn’t work for us!

The underlying problem isn’t technical – it is cultural.

A proven pattern for effective change: The Lighthouse approach

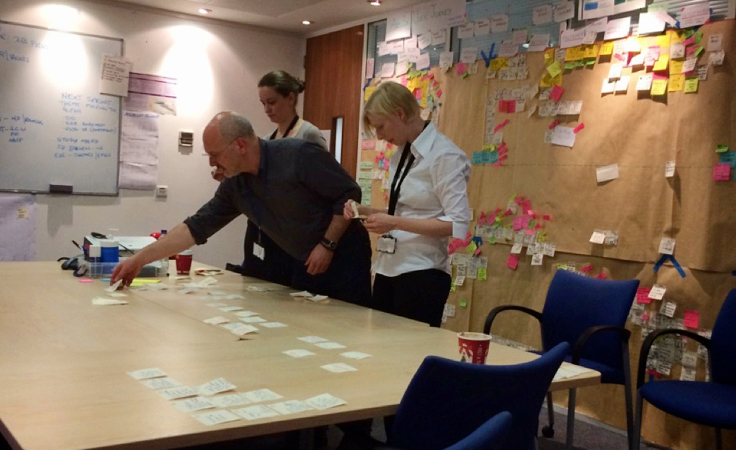

AI is still an emerging practice in the software development life cycle (SDLC). To succeed, we need to make room for experimentation so that repeatable patterns can emerge. A Lighthouse team does exactly that: it is a small, visible team doing real work to demonstrate what good looks like, so the rest of the organisation can learn from it.

A Lighthouse project acts as a beacon: not a pilot, not a lab experiment, but a scaled-down version of the future you want to create.

A good Lighthouse is:

- Real work – proofs of concept don’t work.

- Winnable – confidence grows from seeing early, measurable success.

- Public – visible enough that other teams can learn from it.

Behind each successful Lighthouse lies a principle we call the “innovation budget” – a reminder that not everything should change at once. Choose one area to experiment with (testing, migration, documentation, context engineering) and keep the rest stable. This prevents chaos and helps meaningful patterns emerge.

A practical guide: How to run a Lighthouse

- Identify a meaningful, winnable slice of work

The Lighthouse must deliver something the business cares about: a feature, a service enhancement, a migration step. It cannot be a synthetic test or a playground. It should also be deliverable within a short timeframe.

- Design an SDLC that fits the work (and the experiment)

The SDLC needs to be tailored to both the type of work and the AI-enabled techniques you want to explore. Legacy migration, greenfield development, and feature delivery each require different rhythms. Think of the Lighthouse SDLC as a temporary operating model designed to learn, evolve, and stabilise.

Just as agile taught us to continuously inspect and adapt, a Lighthouse requires a similar rhythm of continuous improvement. The first version won’t be perfect; it’s a living system that evolves with every learning cycle.

- Form a small, cross-functional team embedded in the business context

A team of three to five people with a closely involved business stakeholder is the ideal setup. AI-enabled delivery tightens the feedback loop between business and technology. When both groups share context, the whole system moves faster.

- Build capability in context engineering

Rather than spending most of your effort on crafting prompts, focus on the real skill: managing context. Context is a curated collection of documentation, architecture decisions, coding standards, “what good looks like” (WGLL) templates and so on – all the information the AI needs to execute a task accurately. Good output depends on good input: useful results come from well-curated, up-to-date information.

- Stay transparent and share what you learn

Each Lighthouse documents its process in a playbook that others can use as a starting point: what was tried, what worked, and what didn’t. As the next team progresses using the playbook, they can adjust it based on their local context and learning.

Scaling through practice, not policy

Once the first Lighthouse proves its value and becomes a reference point for “what good looks like”, other teams can begin adopting the approach, building on the lessons learned.

Over time, patterns will stabilise and new SDLC shapes will emerge as standards for different work types. Teams gain clarity on their innovation budget, understanding where to experiment and where established processes should be followed. Context is no longer tacit; it is managed and versioned, treated with the same rigour as code. What emerges is a deliberate, low-friction way of working, grounded in demonstrable evidence.

The gap between technology and business outcomes narrows and AI becomes a natural part of everyday delivery, rather than a hopeful experiment.

Want help starting a Lighthouse?

If your AI adoption feels busy but not productive, a Lighthouse is a practical, low-risk way to create clarity and results. If you’d like support designing or running one, we’d love to hear from you.

About the Author

Liis’s expertise spans software delivery and organisational change. With experience across product and delivery roles, she brings practical insight into helping organisations navigate complex technical and cultural transformations. She is passionate about exploring how AI will fundamentally transform software delivery and reshape operating models, working with clients across Europe to develop frameworks that align technology adoption with team capabilities and business outcomes. Connect with Liis on LinkedIn.